Artificial intelligence (AI) is a powerful and transformative field with the capacity to fundamentally change both medicine and the way healthcare services are delivered. [1] The term refers to the use of computers and technology to mimic human-like reasoning and problem-solving. [2] Human intelligence is one of the most remarkable products of evolution. [3] John McCarthy coined the term AI in 1956 to describe the science and engineering of creating intelligent machines. [2]

A critical aspect of the brain's cognitive power is its capacity to build intricate models that represent the complex world around us as precisely as possible. These models let us make effective predictions and so navigate and interact successfully with our environment.

AI, by contrast, encompasses a wide range of processes that enable computers to perform tasks that typically require human intelligence. Machine learning is a subset of AI that relies on datasets representing specific learning environments. [3] These datasets are often large, sometimes containing millions of distinct data points. [4]

In machine learning, patterns are extracted from data and then used either to improve our understanding of the underlying data or to make predictions from it. The past decade has seen remarkable advances in machine learning, particularly with the emergence of deep artificial neural networks, also known as deep neural networks (DNNs). [3] DNNs, originally inspired by the human brain, enable computers to excel at cognitive tasks at which humans are proficient. [5] However, it will likely take many years, perhaps even decades, for machine learning or AI to cover the full range of human intelligence.

Even so, AI in the form of machine learning already achieves results that surpass human performance in certain medical domains. As these methods continue to evolve, their development must be accompanied by critical and unbiased expertise, so that medicine remains committed to its principle of delivering the best possible patient care. The medical profession bears a particular responsibility in this regard. [3]

This article offers a comprehensive overview of the crucial aspects involved in evaluating the quality, usefulness, and constraints of AI applications in the context of patient care.

Need for AI in Healthcare

AI, a field of science and engineering, focuses on creating intelligent machines. These machines follow algorithms, or sets of rules, designed to replicate human cognitive functions such as learning and problem-solving. AI systems can anticipate problems or deal with them as they arise, operating in a deliberate, intelligent, and adaptive manner. [1] AI can make significant progress toward making healthcare more personalized, predictive, preventive, and interactive. [6]

One of AI's key strengths lies in its capacity to learn and identify patterns and relationships within large, complex datasets with multiple dimensions and types of information. For instance, AI systems can distill a patient's extensive medical history into a single numerical representation that suggests a likely diagnosis. Furthermore, AI systems are dynamic and self-adjusting, continually acquiring knowledge and adjusting as additional data becomes accessible. It's crucial to note that AI is not a single, all-encompassing technology; instead, it encompasses various subfields like machine learning and deep learning, which, either independently or in combination, enhance the intelligence of applications. [1]
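The idea of condensing a patient's record into a single number, and of a model that keeps adjusting as new data arrive, can be sketched with a tiny logistic model. This is a minimal illustration, not a method from the cited sources: the class name `OnlineRiskScore`, the learning rate, and the assumption that the record has already been encoded as a numeric feature vector are all hypothetical.

```python
import math


class OnlineRiskScore:
    """Hypothetical toy model: condenses a numeric feature vector into one
    probability and adjusts its weights one labelled example at a time."""

    def __init__(self, n_features, learning_rate=0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def score(self, features):
        # Weighted sum of the features, squashed into (0, 1) by the logistic function.
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, features, label):
        # One stochastic gradient step on the log-loss for a single new case
        # (label 1 = condition present, 0 = absent).
        error = self.score(features) - label
        self.weights = [w - self.learning_rate * error * x
                        for w, x in zip(self.weights, features)]
        self.bias -= self.learning_rate * error


model = OnlineRiskScore(n_features=2)
print(model.score([1.0, 0.5]))  # untrained: 0.5
for _ in range(50):
    model.update([1.0, 0.5], 1)  # repeated positive cases push the score up
print(model.score([1.0, 0.5]))
```

Each `update` call moves the weights toward the newly observed outcome, which is one simple form of the continual adjustment described above.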

Healthcare systems worldwide face significant challenges in achieving the "quadruple aim" of healthcare: improving population health, enhancing the patient's care experience, improving the caregiver's experience, and reducing the rising cost of care. These challenges are compounded by aging populations, a growing burden of chronic disease, and rising global healthcare expenditure. Regulators, governments, providers, and payers are under pressure to innovate and redesign healthcare delivery models in response.

Furthermore, the COVID-19 pandemic has made it more urgent for healthcare systems both to deliver effective, high-quality care (to "perform") and to transform care at scale, feeding insights from real-world data directly into patient care. The pandemic has also exposed healthcare workforce shortages and inequities in access to care. Technology and AI have the potential to address some of these supply-and-demand problems.

The growing availability of diverse data types, such as genomic, economic, demographic, clinical, and phenotypic data, combined with advances in mobile technology, the Internet of Things (IoT), computing power, and data security, marks a pivotal moment at which healthcare and technology are converging. This convergence promises to fundamentally reshape healthcare delivery models through AI-enhanced healthcare systems. [1]

Data as the Basis of Machine Learning

Machine learning is a statistical technique for fitting models to data and for "learning" by training those models with data. [7] It is one of the most common forms of AI and can uncover previously unknown relationships, generate new hypotheses, and direct researchers and resources toward the most promising avenues. In medicine, machine learning is widely used to build automated clinical decision-making systems. [7, 8]

The utilization of data analysis and computer algorithms, particularly in machine learning and data simulation, has led to the emergence of novel clinical models within the field of clinical science. Moreover, the integration of extensive datasets into healthcare informatics, facilitated by the proliferation of online data sources, has spurred the development of innovative approaches for examining this data. These approaches include deep learning as well as data simulation techniques, such as network analysis employing graph theory. [9]

Deep learning, a subset of machine learning, emulates the functioning of the human brain through the utilization of multiple layers of artificial neural networks to autonomously generate predictions based on training data sets. [8] In AI, machine learning algorithms are tasked with identifying patterns or abstract rules within the training data. These learned patterns are subsequently applied to novel data to identify specific characteristics, make predictions, or generate statements. Conceptually, machine learning bears a striking resemblance to human learning, which encompasses learning from examples and recognizing similarities and differences in the information presented.
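The layered structure of a deep network can be illustrated with a forward pass written in plain Python. This is a minimal sketch under stated assumptions: the weights below are arbitrary placeholders that training would normally learn from data, and the helper names `relu`, `dense`, and `forward` are illustrative, not taken from any library.

```python
def relu(vector):
    # Rectified linear unit: negative activations are set to zero.
    return [max(0.0, v) for v in vector]


def dense(inputs, weights, biases):
    # Fully connected layer: weights[j][i] links input i to output neuron j.
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def forward(inputs, layers):
    """Pass the inputs through a stack of (weights, biases) layers,
    applying ReLU after every layer except the last."""
    activation = inputs
    for index, (weights, biases) in enumerate(layers):
        activation = dense(activation, weights, biases)
        if index < len(layers) - 1:
            activation = relu(activation)
    return activation


# Placeholder parameters (hypothetical): 2 inputs -> 2 hidden neurons -> 1 output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 1.0]], [0.0]),
]
print(forward([2.0, 1.0], layers))  # [2.5]
print(forward([1.0, 2.0], layers))  # [1.5]: the first hidden neuron is clipped to 0
```

Training (not shown) would adjust these weights, typically by backpropagation, so that the final output matches labelled examples.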

The challenges faced by AI applications become more pronounced when dealing with unstructured data and the necessity to integrate various data types. For instance, there are AI-based methods capable of detecting breast cancer in mammograms with sensitivity and specificity comparable to an average radiologist (though not yet at the level of an expert). However, when it comes to utilizing AI to extract meaningful insights from a diverse range of unstructured data sources like DNA sequences, histopathological images, and laboratory results, practical success has been elusive.

Additionally, the use of large medical datasets always carries the risk of infringing individual privacy rights, which may lead to legal restrictions under data protection law. At present, the limited availability and quality of complex, heterogeneous data remain persistent obstacles in many domains, hindering the effective implementation of AI applications in medicine. [3]

Machine Learning Concepts in the Medical Domain

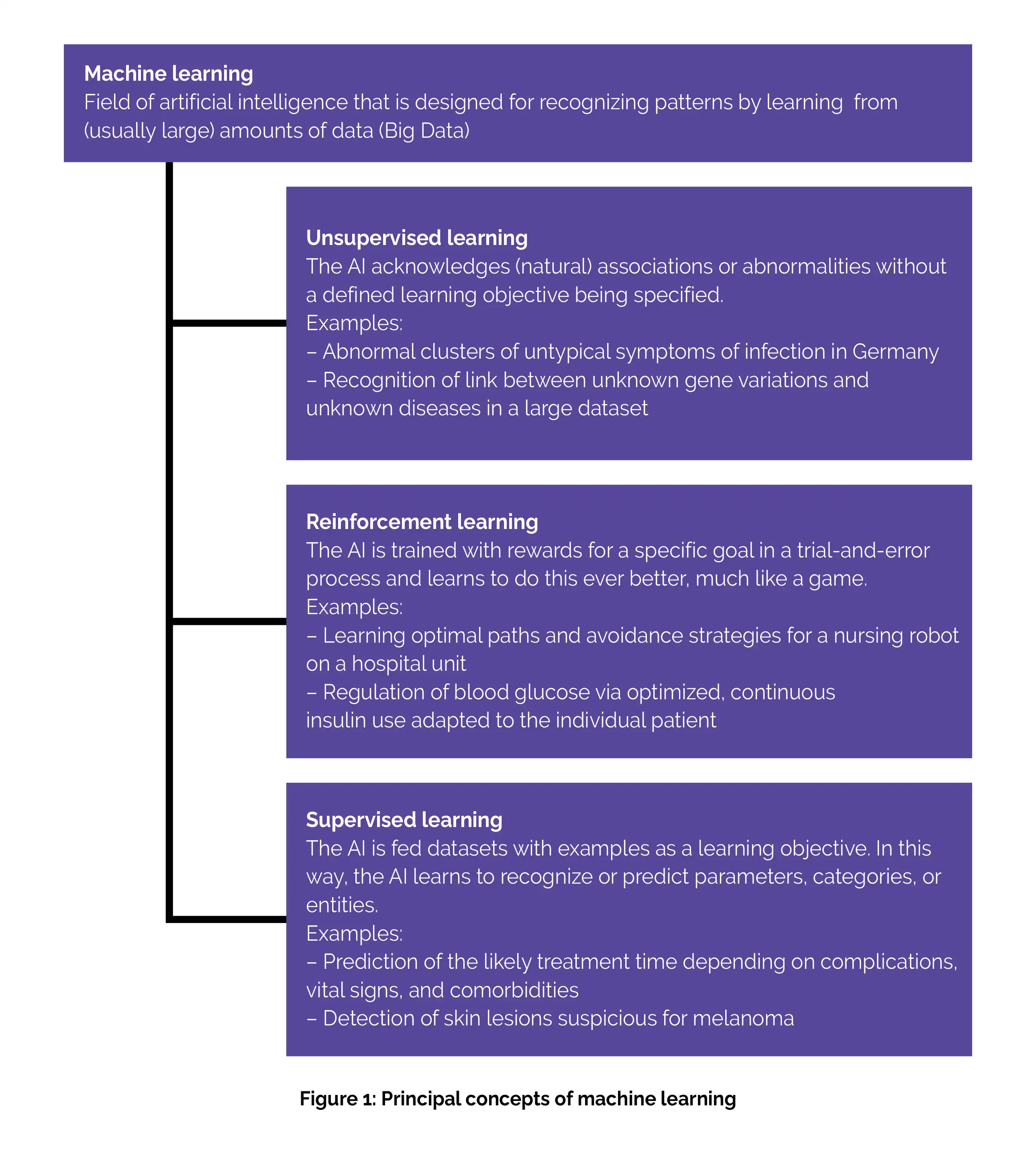

Machine learning approaches can be categorized into three main groups (as shown in Figure 1):

(a) Unsupervised learning: This strategy aims to discover relationships, structures, or anomalies in data without explicit instructions or labels. For instance, unsupervised learning can be used to identify subgroups within complex multiomics datasets. In patient care, unsupervised methods are still experimental, but they hold potential for future applications, for example in syndromic surveillance, possibly as part of outbreak monitoring for infectious diseases.
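Finding subgroups without labels is often done with clustering. The sketch below uses k-means, one common clustering algorithm chosen here purely for illustration (the source does not name a specific method); the two-dimensional points stand in for hypothetical patient features, and the naive initialization from the first k points is a simplification.

```python
def nearest(point, centroids):
    # Index of the centroid closest to `point` (squared Euclidean distance).
    return min(range(len(centroids)),
               key=lambda c: sum((a - b) ** 2 for a, b in zip(point, centroids[c])))


def kmeans(points, k, max_iterations=100):
    """Plain k-means: alternately assign each point to its nearest centroid
    and move each centroid to the mean of the points assigned to it."""
    centroids = [list(p) for p in points[:k]]  # naive initialization: first k points
    labels = [0] * len(points)
    for _ in range(max_iterations):
        labels = [nearest(p, centroids) for p in points]
        new_centroids = []
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                new_centroids.append([sum(dim) / len(members) for dim in zip(*members)])
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # assignments have stabilized
        centroids = new_centroids
    return labels, centroids


# Hypothetical unlabelled measurements that form two obvious groups.
points = [(0, 0), (10, 10), (0, 1), (10, 11), (1, 0), (11, 10)]
labels, centroids = kmeans(points, k=2)
print(labels)  # [0, 1, 0, 1, 0, 1]
```

The algorithm finds the two groups without being told they exist; in practice, the number of clusters and the initialization must be chosen with care, and the resulting subgroups still need clinical interpretation.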

(b) Reinforcement learning: This training approach relies on rewards given for specific outcomes. In medicine, it has so far been explored only in research studies. It may, however, find future applications, such as individualizing insulin administration for patients with a closed-loop approach. [3]
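Learning from rewards can be illustrated with the simplest reinforcement learning setting, a multi-armed bandit solved with an epsilon-greedy strategy. This is a toy sketch, not a dosing algorithm: the three actions and their reward values are hypothetical, and a closed-loop insulin system would have to model the patient's state and enforce safety constraints, both of which this sketch omits.

```python
import random


def epsilon_greedy(reward_fn, n_actions, steps=1000, epsilon=0.1, seed=0):
    """Estimate each action's average reward from feedback alone, mostly
    exploiting the best estimate but exploring at random with probability epsilon."""
    rng = random.Random(seed)
    counts = [0] * n_actions
    values = [0.0] * n_actions  # running mean reward per action
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore
        else:
            action = max(range(n_actions), key=lambda a: values[a])  # exploit
        reward = reward_fn(action, rng)
        counts[action] += 1
        values[action] += (reward - values[action]) / counts[action]  # incremental mean
    return values, counts


# Hypothetical environment: action 1 yields the highest expected reward.
mean_rewards = [0.2, 0.8, 0.5]
values, counts = epsilon_greedy(lambda a, rng: rng.gauss(mean_rewards[a], 0.1), 3)
print(counts)  # action 1 should be chosen most often
```

The agent is never told which action is best; it infers this from the rewards it receives, while the epsilon parameter keeps a small share of choices exploratory.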

(c) Supervised learning: As the name suggests, supervised learning requires input data that have been labeled, typically by an expert in the field, from which the algorithm can learn. Such data are referred to as labeled input. By learning from these labeled inputs, the algorithm can produce accurate outputs when it processes new, unlabeled data. [9] These approaches commonly focus on tasks such as classifying data or predicting future events, and the algorithms are trained on labeled training data in which the desired learning objective is defined.

For instance, in medical applications, this might involve training with X-ray images that have marked abnormalities and images for comparison without any abnormalities. The patterns learned during training are subsequently assessed for their quality on test datasets. The majority of AI applications that have already received marketing authorization are rooted in supervised learning and operate on uniform, single-mode data. For example, they may analyze only images of potential skin lesions to identify malignant ones. [3]
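The train-then-test workflow described above can be sketched with a very simple supervised classifier. A nearest-centroid classifier is used here only because it fits in a few lines; it is not the method behind any particular authorized product, and the two-number feature vectors (imagine values extracted from an X-ray) and their labels are hypothetical.

```python
def fit_nearest_centroid(features, labels):
    """Training: compute the mean feature vector of each labelled class."""
    sums, counts = {}, {}
    for x, y in zip(features, labels):
        if y not in sums:
            sums[y] = [0.0] * len(x)
            counts[y] = 0
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def predict(centroids, x):
    # Assign the label whose class centroid is closest (squared Euclidean distance).
    return min(centroids,
               key=lambda y: sum((a - b) ** 2 for a, b in zip(x, centroids[y])))


def accuracy(centroids, features, labels):
    # Share of cases whose predicted label matches the expert label.
    hits = sum(predict(centroids, x) == y for x, y in zip(features, labels))
    return hits / len(labels)


# Hypothetical labelled training set and a separate, held-out test set.
train_x = [(0.1, 0.2), (0.0, 0.1), (0.9, 1.0), (1.0, 0.8)]
train_y = ["normal", "normal", "abnormal", "abnormal"]
test_x = [(0.2, 0.1), (0.8, 0.9)]
test_y = ["normal", "abnormal"]

model = fit_nearest_centroid(train_x, train_y)
print(predict(model, (0.95, 0.9)))  # abnormal
print(accuracy(model, test_x, test_y))  # 1.0
```

Evaluating on cases the model did not see during training mirrors the quality check on test datasets described above; a test set this small is only illustrative.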

AI Applications: Risks and Limitations

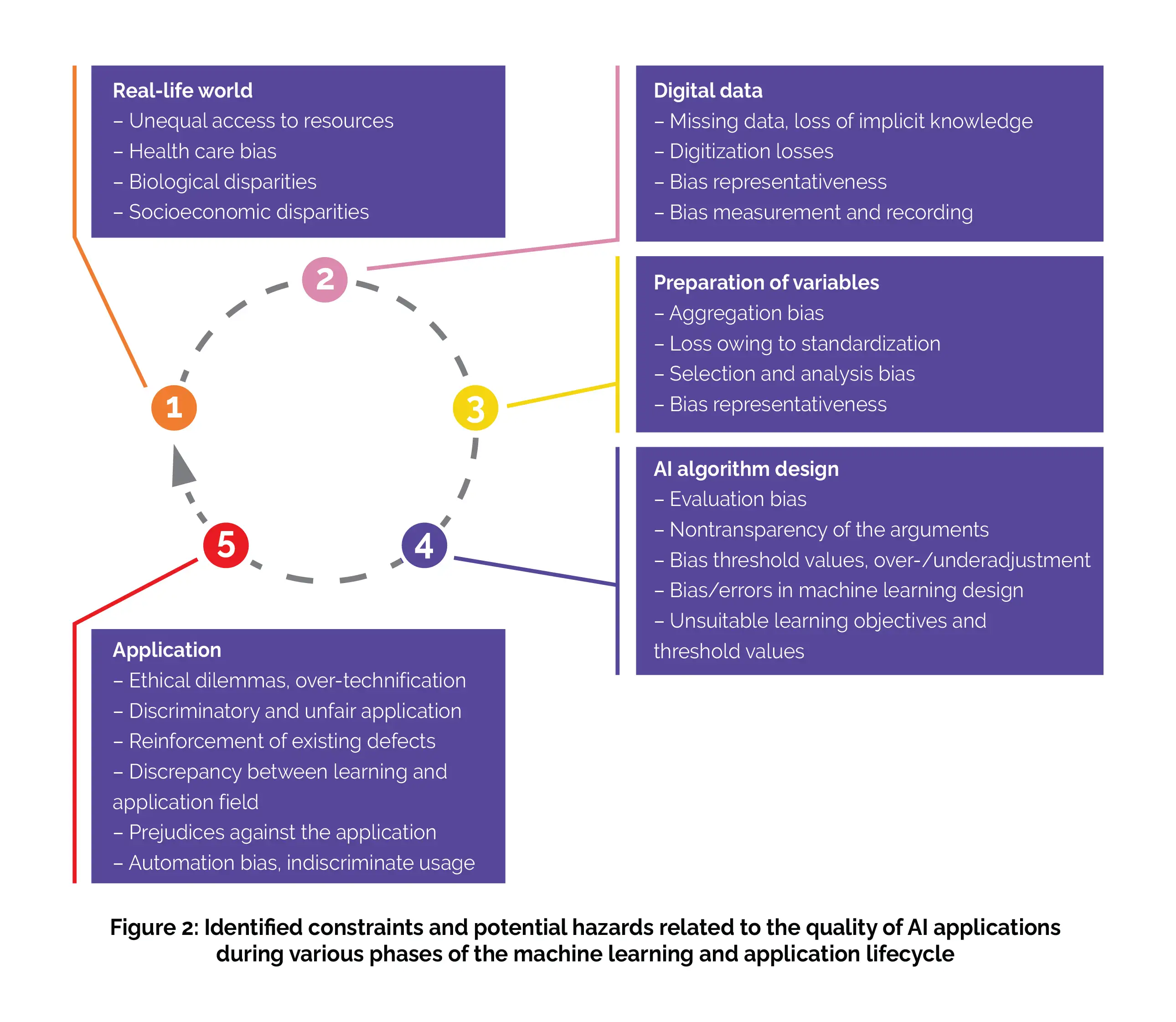

To comprehensively evaluate the risks and limitations in the validity of machine learning applications, it's crucial to understand the machine learning lifecycle, which encompasses several closely interconnected stages. The initial stage involves capturing real-life conditions and representing them as digital data as clearly as possible. The relevant variables are then carefully chosen and prepared, a process known as feature selection and engineering (although this step is somewhat simplified in DNNs). Subsequently, these data are analyzed by the machine learning algorithm.

The outputs of this analysis are used by people, typically physicians, and in turn have a tangible impact on the real world, particularly on patient treatment. At every stage of the machine learning lifecycle, numerous partially overlapping factors come into play that can substantially skew the outcomes of an AI application and limit its reliability. These limitations largely explain why, in many domains, the practical integration of AI into patient care has fallen short of expectations. A critical examination of each stage of the machine learning lifecycle is therefore indispensable for a realistic assessment of the capabilities and merits of machine learning applications.

(a) Real world

People live in a real world that is typically marked by socio-economic, biological, and other disparities, which can put certain individuals or population groups at risk or at a disadvantage. When collecting the data on which an AI application is built, such biases must be considered and, where necessary, corrected. [3]

(b) Digital data

Data from digital technologies are increasingly being integrated into public health research and are expected to transform medicine. [10, 11] In essence, however, data can only ever offer a partial and incomplete representation of the real world. To achieve a reasonably accurate picture of reality, data collection must prioritize objectivity, precision, and accuracy, and the chosen data sources must be adequately representative. Yet a substantial amount of medical information, especially individual-specific data, can only be recorded as free text, with all the intricacies of natural language.

This results in unstructured data that require preprocessing through natural language processing techniques. Moreover, information that cannot be digitally documented generally cannot be harnessed for AI applications. For example, forming a comprehensive assessment of a patient's overall clinical condition based on experience and intuition cannot easily be translated into a format usable by AI systems.
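As a minimal illustration of turning free text into structured input, the sketch below converts a clinical note into term counts over a fixed vocabulary. The note and vocabulary are hypothetical, and real clinical natural language processing is far more involved: as the output shows, this naive approach counts "No fever" as a mention of fever because it ignores negation.

```python
import re
from collections import Counter


def bag_of_words(note, vocabulary):
    """Convert a free-text note into a fixed-length vector of term counts."""
    # Lowercase alphabetic words only; digits and punctuation are dropped.
    tokens = re.findall(r"[a-z]+", note.lower())
    counts = Counter(tokens)
    return [counts[term] for term in vocabulary]


# Hypothetical note and vocabulary.
note = "Patient reports chest pain; pain worse on exertion. No fever."
vocabulary = ["pain", "fever", "cough"]
print(bag_of_words(note, vocabulary))  # [2, 1, 0]
```

The resulting vector can be fed to a model, but everything the counts cannot express (negation, timing, uncertainty) is lost in the process.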

(c) Selection and preparation of variables

To achieve the most accurate representations of the real world through AI applications, it's essential to carefully choose and prepare the variables used in these applications. For instance, in oncology diagnostics, variables might be confined to X-ray findings and specific clinical parameters. However, this process of variable selection, along with the subsequent standardization and normalization of data, can potentially diminish their representativeness and constrain the validity of the results generated by an AI application.
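Standardization and normalization are simple transformations, but they depend on the data they are computed from. The sketch below shows z-score standardization and min-max normalization; the example values are hypothetical and show how a single extreme value compresses all other values under min-max scaling, one way in which preprocessing can reduce representativeness.

```python
import statistics


def z_score(values):
    """Standardize: subtract the mean and divide by the population standard deviation."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    if sd == 0:
        raise ValueError("constant variable cannot be standardized")
    return [(v - mean) / sd for v in values]


def min_max(values):
    """Normalize to [0, 1] using the observed minimum and maximum."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("constant variable cannot be normalized")
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical measurements.
print(z_score([2, 4, 4, 4, 5, 5, 7, 9]))  # [-1.5, -0.5, -0.5, -0.5, 0.0, 0.0, 1.0, 2.0]
print(min_max([1, 2, 3, 100]))  # the outlier squeezes 1, 2, 3 into roughly [0, 0.02]
```

Because the mean, standard deviation, minimum, and maximum come from the data at hand, the same raw value can map to different scaled values in different cohorts.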

(d) Algorithm design

The process of designing a machine learning algorithm involves tasks such as programming the software code and integrating the preselected variables. However, during this stage, errors and biases can emerge, often due to insufficient consideration of unique data characteristics, incorporation of inappropriate cut-off values, or vague definitions of pattern recognition objectives. To promote user acceptance and encourage critical evaluation of the algorithm's design, it's essential for the algorithm to be capable of providing explanations for the results it produces in each specific case. This capacity for result explanation is commonly referred to as "explainability".
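For a linear scoring model, one simple form of explainability is to report how much each variable contributed to a particular case's score. The sketch below assumes hypothetical variable names, weights, and cut-off, and it also shows where a cut-off value enters the decision. For non-linear models such as DNNs, per-case explanations require dedicated post-hoc attribution methods, which this sketch does not cover.

```python
def explain_linear(weights, bias, case, cutoff):
    """Score one case with a linear model and list each variable's
    contribution (weight * value), largest absolute contribution first."""
    contributions = {name: weights[name] * value for name, value in case.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, score >= cutoff, ranked


# Hypothetical, already standardized variables and illustrative weights.
weights = {"age": 0.5, "marker": 2.0, "bmi": -0.25}
case = {"age": 1.0, "marker": 1.5, "bmi": 2.0}
score, flagged, ranked = explain_linear(weights, bias=-1.0, case=case, cutoff=1.0)
print(score, flagged)  # 2.0 True
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
```

Raising the cut-off to 2.5 would leave this case unflagged without changing any contribution, which is why cut-off values deserve the same scrutiny as the model itself.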

(e) Application in the real world

During the practical application stage, which involves patient care, errors and biases that may have occurred at any of the previously mentioned stages in the machine learning lifecycle can have detrimental effects. Specific risks arise from unaccounted-for disparities between the learning environment and the real-world application, as well as from a lack of alignment of AI applications with their intended practical use.
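One way to notice a mismatch between the learning environment and real-world use is to compare the distribution of each input variable at deployment with that seen during training. The sketch below computes a standardized mean difference per variable; the variable names, data, and the 0.5 threshold are hypothetical, and comparing means catches only one kind of shift.

```python
import statistics


def standardized_mean_difference(train_values, deploy_values):
    # Shift of the deployment mean, in units of the training standard deviation.
    sd = statistics.stdev(train_values)
    return (statistics.fmean(deploy_values) - statistics.fmean(train_values)) / sd


def shifted_variables(train, deploy, threshold=0.5):
    """Names of variables whose deployment mean moved by more than `threshold` SDs
    (the default threshold is an arbitrary, hypothetical choice)."""
    return [name for name in train
            if abs(standardized_mean_difference(train[name], deploy[name])) > threshold]


# Hypothetical data: the deployment population is markedly older than the training one.
train = {"age": [40, 50, 60], "lab_value": [1.0, 1.1, 0.9]}
deploy = {"age": [70, 80, 75], "lab_value": [1.0, 1.05, 0.95]}
print(shifted_variables(train, deploy))  # ['age']
```

A flagged variable does not prove the model is wrong for the new population, but it signals that its validation may no longer apply there.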

Inaccurate outcomes, technical challenges, inadequate transparency, and a lack of trust can swiftly hinder the realization of the full potential of AI applications in patient care. For instance, when AI-based programs designed for the analysis of histopathological findings cannot be seamlessly integrated into existing workflows or do not result in time savings, they may not deliver their expected benefits.

Conversely, uncritical and overconfident use of AI applications can have adverse consequences, such as overlooking important considerations in differential diagnosis in clinical practice.

In essence, uncritically pursuing AI-driven treatment approaches risks stripping medicine of essential human elements through excessive reliance on technology. For instance, the quasi-objective estimation of outcome probabilities poses a significant challenge for nuanced communication between patients and doctors. Ethical concerns could also intensify if the outputs of AI applications are used, without careful reflection, as the basis for decisions on prioritizing and allocating healthcare resources. [3]

Assessment of Quality and Utility of Clinical AI Applications

(a) Evidence base for the assessment of machine learning applications

A cornerstone requirement of modern medicine is a sound scientific foundation. Consequently, it must be possible to evaluate transparently the objectivity (absence of uncontrolled influencing factors), reliability, and validity of AI-driven medical applications. To gauge the decision-making quality of AI algorithms, statistical test metrics such as sensitivity, specificity, and precision (positive predictive value) are frequently used. These measures should be supplemented by a critical assessment of bias and risks throughout the entire machine learning lifecycle (Figure 2).
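The test metrics named above follow directly from the four cells of a confusion matrix. The sketch below computes them for hypothetical predictions (1 = condition present) and adds a standard Bayes' theorem calculation showing that precision, unlike sensitivity and specificity, depends on how common the condition is in the tested population; the function names are illustrative.

```python
def diagnostic_metrics(y_true, y_pred):
    """Sensitivity, specificity, and precision (PPV) from binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    return {
        "sensitivity": tp / (tp + fn),  # share of diseased cases detected
        "specificity": tn / (tn + fp),  # share of healthy cases correctly cleared
        "precision": tp / (tp + fp),    # share of positive results that are correct
    }


def ppv_at_prevalence(sensitivity, specificity, prevalence):
    # Bayes' theorem: true positives / all positives in a population with this prevalence.
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


# Hypothetical reference labels and model predictions.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]
print(diagnostic_metrics(y_true, y_pred))
# sensitivity 0.75, specificity about 0.67, precision 0.6

print(round(ppv_at_prevalence(0.9, 0.9, 0.01), 3))  # 0.083
```

With 90% sensitivity and specificity at 1% prevalence, only about one in twelve positive results is correct, so metrics reported in one setting cannot simply be carried over to another.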

Moreover, an evidence-based assessment of usefulness entails examining the method's performance in a real-world context and comparing it to alternative approaches, akin to the methodology employed in a clinical trial. For example, this could involve a prospective intervention study that contrasts an AI-based diagnostic procedure with a traditional one. Depending on the specific medical application, it's important to consider relevant patient-specific outcomes like quality of life, survival rates, disease progression, and symptom alleviation, in addition to accuracy when evaluating AI systems.

Ideally, improving these patient-specific outcomes should be considered from the early stages of training an AI application; only then can the application have the potential to outperform human diagnostic and treatment decisions. So far, however, only a limited number of prospective studies have compared AI applications with existing standards of medical care or demonstrated their utility in this context. A thorough evaluation of the added value of an AI application involves more than assessing potential risks to patient safety; it also means examining cost-effectiveness (including potential savings in time and resources) and considering the ethical and sociocultural implications.

(b) Real-world application of machine learning-based tools in patient care

At the time of writing, the US Food and Drug Administration (FDA) had authorized 521 medical AI applications. In Germany, no official figures are readily available, but it is reasonable to assume that there have been only a few dozen authorizations so far. Compared with the volume of AI-related publications, the practical impact of approved applications in Germany appears relatively limited. Several factors contribute to this, including the limitations in data availability mentioned above, the difficulty of moving AI applications from the learning phase into practical use, and the challenge of integrating them effectively and economically into existing healthcare processes.

AI applications for patient care are typically classed as medical devices and may only be marketed or used after a conformity assessment based on their risk class. Formally, their approval generally covers decision support only and requires that ultimate responsibility remain with the physicians who use them. Indiscriminate use of these methods, such as relying on them blindly (which can lead to automation bias), therefore carries risks.

Furthermore, it has not been mandatory until now to publish utility-focused studies when seeking approval for medical devices.

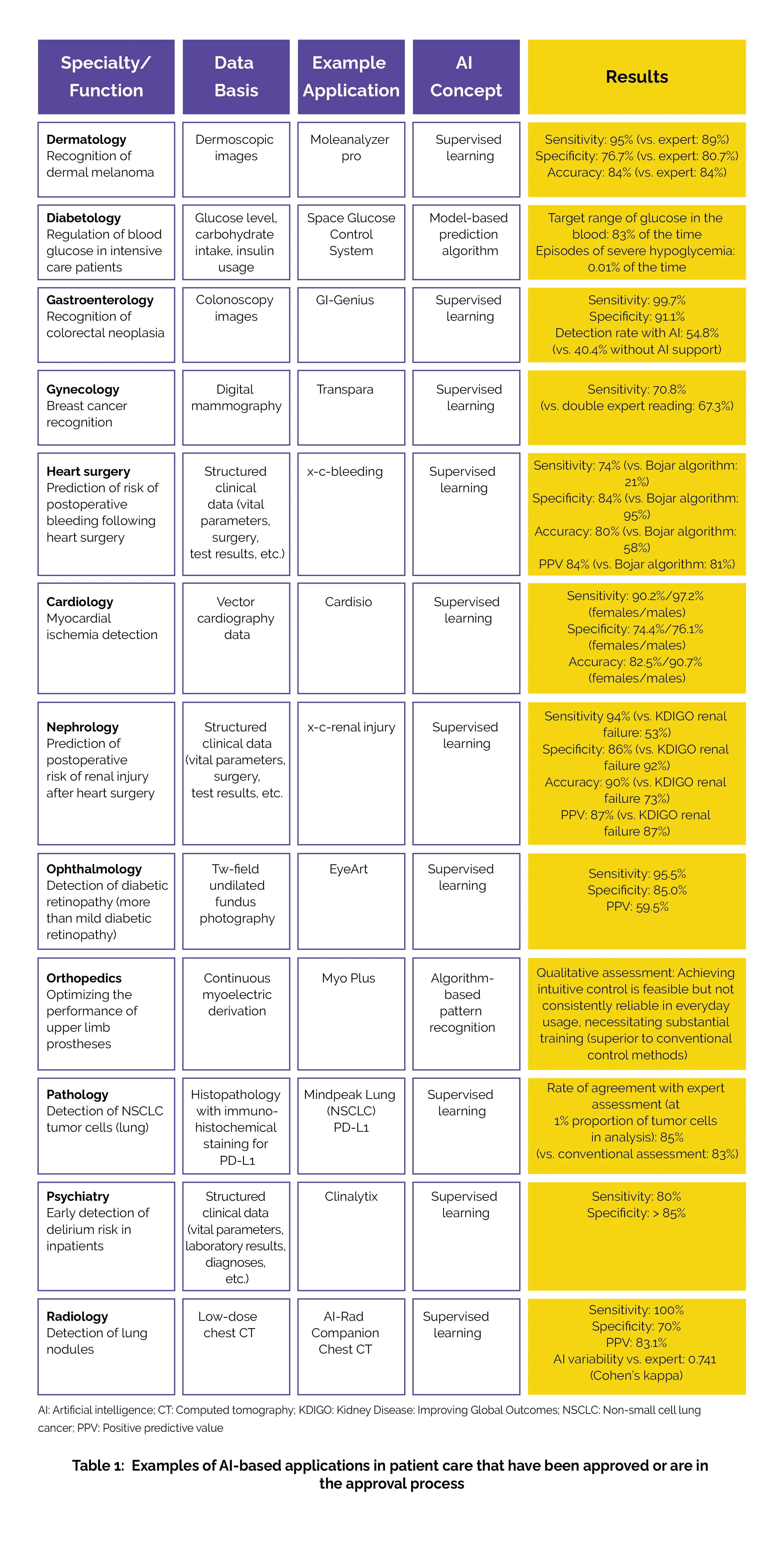

Instead, approval has often been based solely on functionality tests without any accompanying scientific research, or the studies conducted have been in controlled, artificial environments. Consequently, the quality and usefulness of numerous machine learning-based applications already in usage in Germany are not presented in a transparent way. Table 1 below provides an overview of several systems that have been authenticated or are in the process of approval in Germany, along with examples of the existing scientific proof for each situation.

Most of these applications rely on uniform, single-mode data. The published evidence for approved AI applications is heterogeneous and at times unclear. For certain techniques, utility can be assessed from published application studies: machine learning-assisted colonoscopic detection of colorectal polyps and photo-based detection of malignant skin lesions, for instance, have shown performance on par with standard techniques. For other authorized applications, however, either no data on clinical utility exist or only certain statistical parameters (such as sensitivity and specificity) have been published.

In some instances, the utility of AI applications is not tied to an additional clinical benefit but rather stems from more efficient processes or a reduction in treatment barriers. This is particularly evident in situations where specialized expertise is lacking on-site (e.g., identifying rare electrocardiographic findings) or when high throughput is essential (e.g., mammography screening). Finally, AI applications can considerably enhance practical utility by delivering cost savings and improving access to specific treatments in underserved regions. This can be observed in cases like the diagnosis of malignant melanoma and diabetic retinopathy. [3]

Conclusion

The use of AI in medical settings has grown significantly, leading to enhanced diagnostic precision, more efficient treatment strategies, and better results for patients. The swift advancements in AI, particularly in generative AI and large language models, have sparked renewed conversations about how they might influence the healthcare sector, especially with regard to the roles of healthcare professionals. [12]

AI's influence is evident in various aspects of healthcare, including the detection of medical conditions in images, early diagnosis of COVID-19 to control its spread, offering virtual patient care through AI-driven tools, the management of electronic health records, enhancing patient involvement and adherence to treatment, easing the administrative burden on healthcare professionals, uncovering new drugs and vaccines, identifying errors in medical prescriptions, extensive data storage and analysis, and aiding in technology-assisted rehabilitation.

With the emergence of COVID-19 and its impact on global healthcare, AI has brought about a revolutionary transformation in the field. This revolution could represent a significant stride towards meeting future healthcare requirements. [13] Machine learning offers a versatile set of tools suitable for deployment at any stage of a pandemic. In the context of studying disease processes, where vast amounts of data are generated, machine learning accelerates the analysis and swift recognition of patterns that would otherwise require an extended period using conventional mathematical and statistical methods.

Its adaptability, its capacity to evolve as insights into the disease develop, its self-improvement as new data become available, and its impartial approach to analysis make machine learning an exceptionally flexible and innovative tool for managing emerging infections. However, because machine learning derives its insights from large volumes of data, it demands stringent quality control throughout data collection, storage, and processing. Standardizing data structures across diverse populations is crucial to enable these systems to adapt and learn from data worldwide, a capability previously unattainable but of utmost importance in addressing a global pandemic such as COVID-19.

Furthermore, considering the inherent data variability in the early stages of a new pandemic, exercising caution is paramount when feeding such raw and outlier-rich data into AI algorithms. [14] The proper oversight of AI applications plays a pivotal role in ensuring patient safety, accountability, and in gaining the trust and support of healthcare professionals to harness its potential for significant health benefits. Effective governance is essential to navigating regulatory, ethical, and trust-related challenges while promoting the acceptance and integration of AI. [13]

The rapid expansion of our knowledge and information resources, alongside the emerging diagnostic and treatment possibilities, presents medicine with the dilemma of either condensing this information to make it manageable or utilizing it fully and optimally for the benefit of patients and society. In the present day, doctors find themselves under rising pressure to keep up with the constantly evolving landscape of scientific and technological advancements, all while navigating economic constraints and upholding the principles of compassionate healthcare.

This juggling act can sometimes push them to their limits. Machine learning, currently the leading frontier of AI, emulates human learning and, depending on the quality of the data and the computational resources available, can offer increasingly accurate medical predictions and classifications. Current evidence indicates that AI support can yield growing benefits in preventive, diagnostic, and therapeutic patient care. However, any technology that indirectly affects medical practice and, by extension, people's health, disease, and mortality must be meticulously evaluated for its benefits and potential drawbacks.

During the learning and application phases of machine learning, risks may emerge due to potential biases, adverse feedback loops, and errors. Therefore, AI applications present a potential hazard to patients and should continue to be subjected to rigorous scrutiny through human judgment. A broad set of skills is needed to evaluate the quality of AI applications in healthcare. These skills encompass various areas, including medical expertise, law, the design of care processes, ethics, data science, and computer science. Because not all of these aspects fall within the specific domain of medicine, it is crucial for the medical profession to develop a deep understanding of AI.

This understanding is essential if physicians are to integrate AI into patient care responsibly, assess its impact critically, and fulfill their social responsibility. Given the complexity of AI applications and their frequent lack of transparency, regulation is needed that focuses on demonstrable benefits, the risks associated with specific applications, and transparency of content. Used responsibly, AI has the potential to advance evidence-based, cost-effective patient care while preserving the fundamental human aspects and intelligence of medicine. [3]

References

1. Bajwa J, Munir U, Nori A, Williams B. Artificial intelligence in healthcare: transforming the practice of medicine. Future Healthcare Journal. 2021 Jul;8(2):e188-e194.

2. Amisha, Malik P, Pathania M, Rathaur VK. Overview of artificial intelligence in medicine. Journal of Family Medicine and Primary Care. 2019 Jul;8(7):2328-2331.

3. Wehkamp K, Krawczak M, Schreiber S. The Quality and Utility of Artificial Intelligence in Patient Care. Deutsches Ärzteblatt International. 2023 Jul 10;120.

4. Nichols JA, Herbert Chan HW, Baker MAB. Machine learning: applications of artificial intelligence to imaging and diagnosis. Biophysical Reviews. 2019 Feb;11(1):111-118.

5. Cichy RM, Kaiser D. Deep Neural Networks as Scientific Models. Trends in Cognitive Sciences. 2019 Apr;23(4):305-317.

6. Khan B, Fatima H, Qureshi A, Kumar S, Hanan A, Hussain J et al. Drawbacks of artificial intelligence and their potential solutions in the healthcare sector. Biomedical Materials & Devices. 2023 Feb 8:1-8.

7. Davenport T, Kalakota R. The potential for artificial intelligence in healthcare. Future Healthcare Journal. 2019 Jun;6(2):94-98.

8. Noorbakhsh-Sabet N, Zand R, Zhang Y, Abedi V. Artificial intelligence transforms the future of health care. The American Journal of Medicine. 2019 Jul 1;132(7):795-801.

9. Soriano-Valdez D, Pelaez-Ballestas I, Manrique de Lara A, Gastelum-Strozzi A. The basics of data, big data, and machine learning in clinical practice. Clinical Rheumatology. 2021 Jan;40(1):11-23.

10. Romero RA, Young SD. Ethical Perspectives in Sharing Digital Data for Public Health Surveillance Before and Shortly After the Onset of the COVID-19 Pandemic. Ethics & Behavior. 2022;32(1):22-31.

11. Lehne M, Sass J, Essenwanger A, Schepers J, Thun S. Why digital medicine depends on interoperability. NPJ Digital Medicine. 2019 Aug 20;2:79.

12. Sezgin E. Artificial intelligence in healthcare: Complementing, not replacing, doctors and healthcare providers. Digital Health. 2023 Jul 2;9:20552076231186520.

13. Al Kuwaiti A, Nazer K, Al-Reedy A, Al-Shehri S, Al-Muhanna A, Subbarayalu AV et al. A Review of the Role of Artificial Intelligence in Healthcare. Journal of Personalized Medicine. 2023 Jun 5;13(6):951.

14. Bansal A, Padappayil RP, Garg C, Singal A, Gupta M, Klein A. Utility of artificial intelligence amidst the COVID 19 pandemic: a review. Journal of Medical Systems. 2020 Sep;44:1-6.
